In the first post of this series we explained the difference between some of the common buzzwords found in Machine Learning, such as Artificial Intelligence, Supervised Learning, Unsupervised Learning and Deep Learning. In this post, while we will certainly throw a few more buzzwords into the mix, we are going to explore some common machine learning techniques in a bit more detail, along with the types of problems they are best suited to solving.

At the simplest level, machine learning systems try to predict a value for something. To perform that prediction, we need 3 things:

- A way of describing the subject of our prediction,

- A question that we want to answer, and,

- An algorithm that can take the description and provide an answer to our question.

Which Features do I care about?

In machine learning terminology, the way that we tell our system about the subject of our question is by using something called a “feature vector”. That sounds a bit too much like maths for this post, but you have no doubt heard the phrase “if it looks like a duck, walks like a duck and sounds like a duck, then it’s a duck”.

Each of those attributes (how it walks, how it sounds, how it looks) is an example of a different “feature”, and the value of each feature will help us (or our machine learning system) decide whether the object we are looking at is a duck or not.

Every type of object has its own set of features, and different instances of each type will have different values for those features. All ducks may swim and quack, but some ducks are bigger than others and have different coloured plumage.

A common example used in machine learning courses is that of predicting a house price, and it’s an example that will be useful here too. Houses have countless different attributes: how old is it? how many bedrooms does it have? what is the total size? does it have a garden? what colour is the front door? what are the local schools like? is it well maintained? By aggregating the values of each of those attributes (or features) into a list (or vector), we have a way of telling our algorithm about the characteristics of our house and of all the other houses we may be interested in.
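To make the idea concrete, here is a minimal Python sketch of turning a house into a feature vector. The feature names and values are hypothetical, chosen only for illustration; the one real requirement is that every house is described by the same features in the same order, with yes/no attributes such as “has a garden” encoded as 1 or 0.

```python
# Hypothetical feature names; every house must use the same order.
FEATURES = ["age_years", "bedrooms", "floor_area_m2", "has_garden", "school_rating"]


def to_feature_vector(house: dict) -> list[float]:
    """Turn a dict of house attributes into a fixed-order list of numbers."""
    return [float(house[name]) for name in FEATURES]


house = {
    "age_years": 35,
    "bedrooms": 3,
    "floor_area_m2": 110,
    "has_garden": True,  # booleans become 1.0 (yes) or 0.0 (no)
    "school_rating": 4,
}

print(to_feature_vector(house))  # [35.0, 3.0, 110.0, 1.0, 4.0]
```

Fixing the order in one place (`FEATURES`) means any two houses can be compared position by position, which is what most algorithms assume.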

However, the different features of a house will have a different impact on its value.

Not all Features are Equal

The size of a house will arguably have the most impact, whereas the quality of the local schools may only matter to buyers with school-age children, so that feature may have less impact on value. The colour of the front door will have virtually no impact at all.

So, while a subject may have many, many features, not all of them are relevant to a given problem. It is important that your machine learning system uses features that discriminate between the different states you are looking for, and which features do that depends on the question you are asking of your machine learning system.

Choosing the most appropriate features for a given problem is called “feature selection”; the broader job of choosing, transforming and constructing the features that describe your data is often called “feature engineering”.
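There are many ways to do feature selection. One of the simplest, shown here as a sketch, scores each feature by how strongly it correlates with the value we want to predict and keeps the highest-scoring ones. The five houses and their values are invented for illustration, and encoding door colour as an integer is a crude simplification. Correlation also only captures straight-line relationships, so treat this as a rough filter rather than a definitive method.

```python
import math


def pearson(xs, ys):
    """Pearson correlation; returns 0.0 if either input has no variation."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant feature cannot tell houses apart
    return cov / (sx * sy)


# Hypothetical toy data for five houses.
features = {
    "floor_area_m2": [50, 70, 90, 110, 130],
    "school_rating": [3, 2, 4, 3, 4],
    "door_colour_code": [1, 2, 3, 2, 1],  # e.g. 1 = red, 2 = blue, 3 = green
}
prices = [150, 210, 270, 330, 390]  # in thousands


def rank_features(features, target):
    """Sort feature names by |correlation| with the target, strongest first."""
    scores = {name: abs(pearson(vals, target)) for name, vals in features.items()}
    return sorted(scores, key=scores.get, reverse=True), scores


ranking, scores = rank_features(features, prices)
print(ranking)  # ['floor_area_m2', 'school_rating', 'door_colour_code']
```

As the text predicts, size comes out on top and door colour at the bottom; a system would then keep only the top few features.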

Now, What Question am I Trying to Answer?

Feature engineering gives us a way of describing our subjects to an algorithm, but what are we actually trying to do? What is the question we are asking of our system?

Broadly, there are two types of question that machine learning systems attempt to answer: questions like “is it a duck?” or, more generally, “what is it?”, and questions about size, such as “how big is it?” or “how much is this house worth?”.

Questions about size produce answers that are continuous: the value can lie anywhere within some practical limits, rather than being one of a fixed set of options. This class of problem is called a “regression” problem. Predicting a house price, a stock price, the size of a crop yield or the capacity of a new data centre are all examples of regression problems.

Regression problems are solved using supervised machine learning techniques, because they require a set of known values (or labels) on which to base the prediction. Returning to our house price example: we have a set of different houses and a set of features for each house. We know how big each house is, how big its garden is, where it is located and maybe even the colour of its front door. We also have a label; specifically, we know how much each house is worth.

At some point, whether in a maths class or at work, there is a good chance that you have plotted a graph of some data and then fitted a line of best fit to it. So, if we have a graph that shows how the value of a house changes with its size, and there is a relationship between those two attributes, then a simple curve-fitting exercise will allow us to estimate the price of another house based only on its size.
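The line-of-best-fit idea takes only a few lines of code. The sketch below fits a straight line, price = intercept + slope × size, using ordinary least squares on a single feature. The sizes and prices are made up for illustration; a real model would normally use many more houses and features.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = my - slope * mx
    return intercept, slope


# Hypothetical training data: floor area (m^2) and sale price (thousands).
sizes = [50, 70, 90, 110, 130]
prices = [155, 205, 275, 325, 390]

intercept, slope = fit_line(sizes, prices)


def predict(size):
    """Estimate the price of a house the model has never seen."""
    return intercept + slope * size


print(round(predict(100), 1))  # 299.5
```

Here the fitted slope is 2.95, so each extra square metre adds about 2,950 to the predicted price, and a 100 m² house that was not in the training data is estimated at about 299.5 thousand.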

For many people, that doesn’t feel much like machine learning. Search an internet Q&A site and you will find plenty of debate about whether it is or isn’t, as it certainly doesn’t have a wow-factor in the same way that, say, automatic translation or auto-captioning an image does. But recall the definition of machine learning from our first post: machine learning is a technique that allows a machine to make a decision on data it hasn’t seen before. Whether there is a wow-factor or not doesn’t matter. Curve-fitting techniques such as “linear regression” are very simple, yet they form the basis of numerous machine learning algorithms and are a fundamental part of every data scientist’s and machine learning engineer’s toolkit.

In contrast to regression, the answer to a “what is it?” type question comes from a set of categories rather than a continuous range. In the machine learning world, these questions can be answered by both supervised and unsupervised techniques; which approach is best depends upon what we want to achieve and the data we have available.

In supervised learning, the “what is it?” question is called a “classification” problem, and the system used to answer it is called a classifier. In unsupervised learning, the “what is it?” question is a “clustering” problem, and the system used to answer it is sometimes called a clustering engine.

Classification vs Clustering

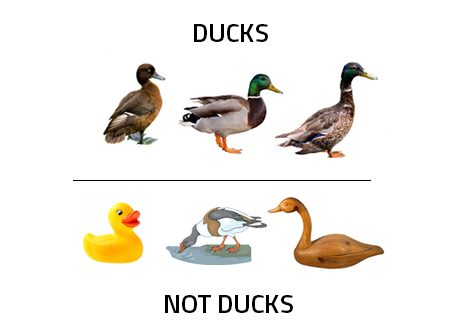

Let’s explore that distinction in more detail. Classification aims to find the best category for an object from a predefined set of possible options. Does the image contain a face, or not? Is that animal a duck, or not? These are examples of what is called “binary classification”, because there are only two categories to choose from: “Duck” and “Not Duck”, or “there is a face” and “there is not a face”.

There are also classification problems with multiple categories, and systems that can handle them are called “multi-class” classifiers: is that animal a duck, a dog or a horse? In our first post we very briefly described Optical Character Recognition (OCR), a machine learning technique that tries to “read” text in images. If we use English text (and, for simplicity, ignore upper/lower case and punctuation marks), then each character will be one of 26 letters or 10 digits, and so OCR becomes a 36-class classification problem.
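As an illustration of a multi-class classifier, the sketch below uses 1-nearest-neighbour, one of the simplest classification algorithms (not the only one, and not necessarily the best): it gives a new animal the label of the most similar labelled training example. The features and training data are hypothetical. Because the features are not scaled, weight dominates the distance; a real system would usually normalise its features first. The last lines simply build the 36-label set for the simplified OCR problem described above.

```python
import math
import string

# Hypothetical labelled training data: [has_feathers, has_webbed_feet, weight_kg]
TRAINING = [
    ([1, 1, 1.2], "duck"),
    ([1, 1, 0.9], "duck"),
    ([0, 0, 30.0], "dog"),
    ([0, 0, 8.0], "dog"),
    ([0, 0, 450.0], "horse"),
    ([0, 0, 550.0], "horse"),
]


def classify(features):
    """1-nearest-neighbour: return the label of the closest training example."""
    _, label = min(TRAINING, key=lambda example: math.dist(example[0], features))
    return label


print(classify([1, 1, 1.0]))    # duck
print(classify([0, 0, 25.0]))   # dog
print(classify([0, 0, 480.0]))  # horse

# Label set for the simplified OCR problem: 26 letters plus 10 digits.
OCR_CLASSES = list(string.ascii_lowercase + string.digits)
print(len(OCR_CLASSES))  # 36
```

The classifier can only ever answer with one of the labels it was trained on, which is exactly the “predefined set of possible options” that distinguishes classification.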

The fundamental difference between classifiers and clustering engines is that in clustering, the groups into which something is assigned are not known in advance and are determined entirely by the patterns in the data. Clustering algorithms take a set of objects and split them into groups, or clusters, where everything in each cluster is similar to everything else in that cluster but different from the items in other clusters.
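Clustering can be sketched with k-means, a widely used clustering algorithm (one of many). This minimal version is given hypothetical animal data with two features and no labels at all. For simplicity it seeds the centroids with the first k points, whereas practical implementations usually use random or k-means++ initialisation.

```python
import math


def k_means(points, k, iterations=10):
    """Plain k-means: return a cluster index for each point.

    Seeds the centroids with the first k points (a simplification).
    """
    centroids = [list(p) for p in points[:k]]
    assignment = [0] * len(points)
    for _ in range(iterations):
        # Assign each point to its nearest centroid.
        assignment = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return assignment


# Hypothetical unlabelled animals: [has_feathers, weight_kg]
animals = [
    [1, 1.1],
    [0, 25.0],
    [0, 500.0],
    [1, 0.9],
    [0, 30.0],
    [0, 480.0],
]

labels = k_means(animals, k=3)
print(labels)  # [0, 1, 2, 0, 1, 2]
```

The output groups the two ducks, the two dogs and the two horses together, but the cluster numbers 0, 1 and 2 are arbitrary: nothing in the algorithm knows that cluster 0 contains ducks.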

As a generic example, let’s say I am trying to create a system that can recognise different animals, and I have two candidates: a supervised machine learning approach (a classifier) and an unsupervised approach (a clustering engine). We will also assume that the classifier has been well trained and produces accurate results. Now, if my collection contains several different animals, such as ducks, dogs and horses, in equal numbers, and I present that collection to my classifier, it would correctly recognise each one and assign it to the appropriate category.

Similarly, if I presented that collection to a clustering engine and I had chosen my features well, I might also expect it to split the collection into three clusters, one for each animal. Importantly, though, the unsupervised system would be unable to label those clusters, as no-one has told it what each cluster represents. It just knows that each cluster contains similar things.

But… if I change my data so the collection of animals contains only ducks, then the two systems start to behave very differently. The supervised system, the classifier, will still be able to say that each animal is a duck. It doesn’t care that every example has the same label. It just compares the features of the animal it has been presented with against the features of everything it has previously been told is a duck and tries to determine if there is a good enough match.

However, the unsupervised system, the clustering engine, looks for patterns within whatever data it is given, so it is now looking for patterns only within that set of ducks. Many of the features that help to distinguish a duck from other animals (does it have feathers? does it have webbed feet? does it quack?) will have the same value for every duck, so they give the clustering engine nothing with which to separate one duck from another. Instead, it finds patterns in the remaining features. So, if those features include the animal’s colour and size, the clustering engine may well split the collection into ducks of different colours or ducks of different sizes.
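Why constant features drop out can be seen directly for a distance-based method such as k-means. In this sketch, with hypothetical duck data, the feathers, webbed-feet and quack features are identical for every duck, so they add exactly zero to the Euclidean distance between any two ducks; only weight and colour are left to drive the grouping.

```python
import math

# Hypothetical ducks: [has_feathers, webbed_feet, quacks, weight_kg, is_white]
ducks = [
    [1, 1, 1, 1.1, 1],
    [1, 1, 1, 1.3, 1],
    [1, 1, 1, 3.0, 0],
    [1, 1, 1, 2.8, 0],
]


def varying_columns(rows):
    """Indices of features whose value is not the same for every row."""
    return [i for i in range(len(rows[0])) if len({row[i] for row in rows}) > 1]


cols = varying_columns(ducks)
print(cols)  # [3, 4]

# The distance between two ducks is unchanged when constant columns are dropped.
full = math.dist(ducks[0], ducks[2])
reduced = math.dist([ducks[0][i] for i in cols], [ducks[2][i] for i in cols])
print(math.isclose(full, reduced))  # True
```

Any clusters found in this data must therefore be based on weight and colour, which is why the clustering engine ends up separating big ducks from small ones, or white ducks from the rest.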

These differences in behaviour highlight the strengths and weaknesses of both approaches. Supervised systems need to know up front what they are looking for, and they need to be trained to look for those categories. Those activities take time, but the advantage is that a duck will always be a duck. Unsupervised techniques look for hidden patterns in your data, the “unknown unknowns” if you will, and if your data changes, then your patterns will change too.

So if you know that you are simply trying to identify whether an animal is a duck or not, an unsupervised system probably won’t give you the answer you expect. But if you want to find groups of similar ducks, groups of big ducks and little ducks, or white ducks and yellow ducks, then clustering techniques are the way to go.

Clustering, regression and classification are not the exclusive preserve of duck identification systems and house price predictors; these problems exist everywhere, and the world of IT operations is no exception. In the next post we will start to look at some of the problems encountered in IT operations and how we can apply machine learning to solve them.